Exploiting QUIC's Path Validation

QUIC supports connection migration, allowing the client to migrate an established QUIC connection from one path to the other. QUIC’s path validation mechanism can be used to attack the peer and make it consume an unbounded amount of memory. While there have been a number of vulnerabilities in various QUIC implementations, this vulnerability is the first attack against the QUIC protocol itself, i.e. any RFC 9000-compliant implementation is necessarily vulnerable to this attack.

I discovered this denial of service (DoS) vulnerability in October 2023 and disclosed it to the IETF QUIC working group. Several implementations, including my own (quic-go), Cloudflare’s (quiche) and Fastly’s (quicly), were vulnerable to the attack described in this blog post. Other vendors of QUIC implementations were notified as well, but were found not to be affected. This vulnerability was disclosed on December 12, 2023. Affected implementations have released fixes, and users are advised to update.

QUIC Path Validation

To understand how the attack works, we first need to dive into QUIC connection migration.

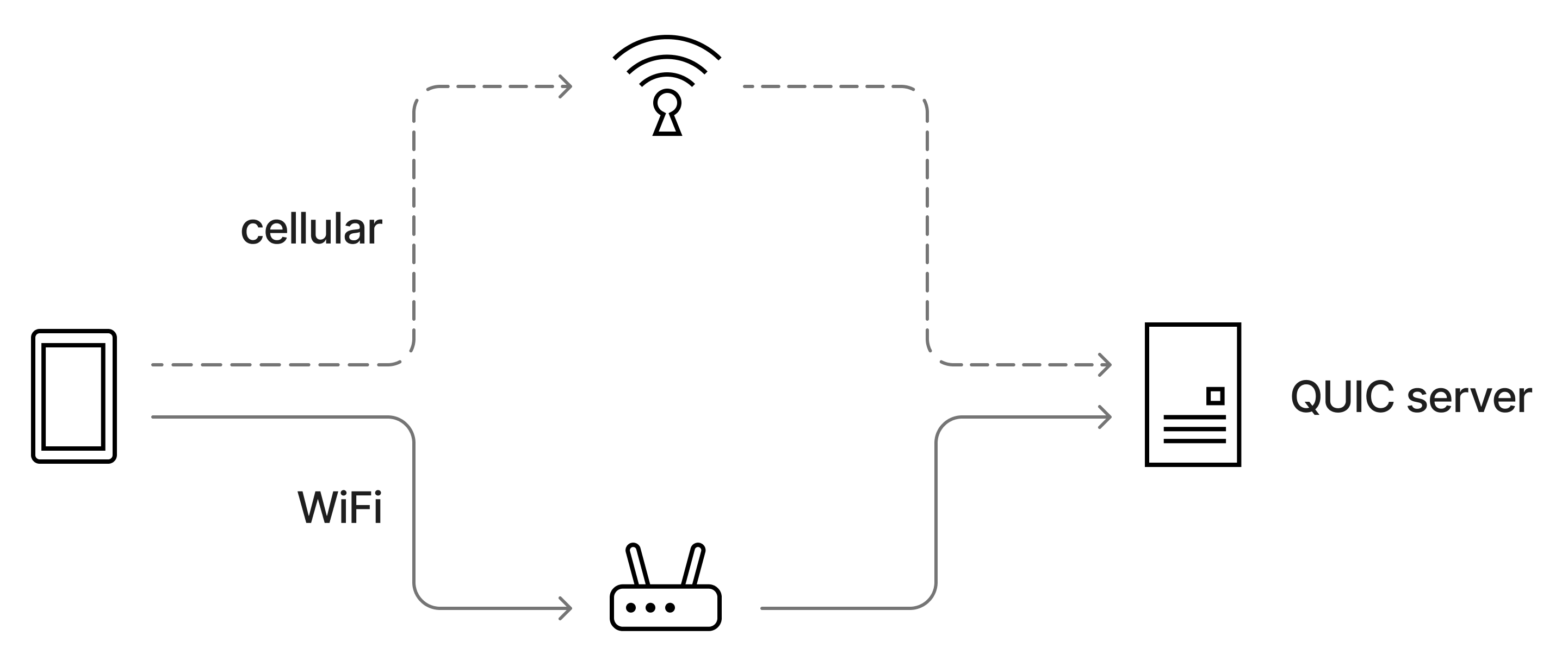

In contrast to TCP, where a connection is identified by the 4-tuple (i.e. the IP:port of both client and server), a QUIC connection can switch to a new 4-tuple over its lifetime. For example, this allows a connection to start on WiFi, and then be migrated to a cellular connection when the user moves away from the WiFi access point. Note that while QUIC supports migrating a connection from one path to the other, at any point in time only a single path is in use to send application data.

The phone has established a QUIC connection to the server via the WiFi link. While that path is still active, it can probe a second path (using the cellular interface) to the server, and switch to this path should the quality of the WiFi path deteriorate.

While exchanging data on one path, the client can initiate “probing” of a new path, i.e. check if that path actually works. This makes it possible to quickly switch to the new path should the old path become unusable. To validate that a new path actually works, the QUIC client sends a packet containing a PATH_CHALLENGE frame on the new path (a so-called “probing packet”). The PATH_CHALLENGE frame contains 8 bytes of unpredictable data. When receiving the PATH_CHALLENGE frame, the server is expected to respond with a PATH_RESPONSE frame, echoing these 8 bytes of data.
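The challenge/response exchange can be sketched in a few lines of Go. This is only an illustration of the echo logic: the `PathChallenge` and `PathResponse` types and the helper functions are hypothetical names, not the API of quic-go or any other QUIC library, and all packet framing and encryption is left out.

```go
package main

import (
	"crypto/rand"
	"fmt"
)

// PathChallenge and PathResponse are hypothetical types for illustration only.
// Both frames carry exactly 8 bytes of data.
type PathChallenge struct{ Data [8]byte }
type PathResponse struct{ Data [8]byte }

// newPathChallenge fills the frame with 8 bytes of unpredictable data.
func newPathChallenge() (PathChallenge, error) {
	var c PathChallenge
	if _, err := rand.Read(c.Data[:]); err != nil {
		return PathChallenge{}, err
	}
	return c, nil
}

// respond is what the receiver of a PATH_CHALLENGE does: echo the 8 bytes.
func respond(c PathChallenge) PathResponse {
	return PathResponse{Data: c.Data}
}

// validates reports whether a PATH_RESPONSE matches the challenge that was sent.
func validates(c PathChallenge, r PathResponse) bool {
	return c.Data == r.Data
}

func main() {
	c, err := newPathChallenge()
	if err != nil {
		panic(err)
	}
	r := respond(c)
	fmt.Printf("challenge=%x response=%x valid=%t\n", c.Data, r.Data, validates(c, r))
}
```

Because the data is unpredictable, a matching PATH_RESPONSE shows that the peer actually received the probing packet on that path.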

What’s not immediately obvious from the RFC is that the exchange of PATH_CHALLENGE and PATH_RESPONSE frames can be used to determine the round-trip time (RTT) of the new path. In fact, this is the only way to do this, since the acknowledgement for the probing packet will be sent on the old path. The client calculates the RTT by measuring the time between sending a PATH_CHALLENGE and receiving the corresponding PATH_RESPONSE. When probing a new path, it therefore makes sense to send a few probing packets. This makes sure that path validation succeeds even if one of the packets containing PATH_CHALLENGE or PATH_RESPONSE frames is lost, and it also yields a more reliable RTT measurement.

The Attack

A malicious client can use the path validation mechanism to trick the server into building an unbounded queue of PATH_RESPONSE frames, eventually exhausting all its memory. The attacker sends a large number of packets containing PATH_CHALLENGE frames. This forces the victim to send a PATH_RESPONSE in response to every such frame. It’s important to note that these challenges don’t need to be sent on a new path. While the path validation mechanism is primarily intended to probe new paths, it is valid to send PATH_CHALLENGEs on existing paths.

This would not be a problem if the victim was able to send out PATH_RESPONSE frames at the same rate as PATH_CHALLENGE frames are coming in. However, the attacker is effectively in control of the rate at which the victim is able to send new packets. To understand why, we need to take a quick detour into congestion control.

QUIC implementations use a congestion controller to limit the rate at which they send packets into the network. In practice, there are many different congestion control algorithms in use, but virtually all of them react to packet loss by reducing the send rate. This makes sense, since packet loss usually occurs when a queue in one of the routers on the path overflows.

The client can make the victim think that lots of packets were lost by acknowledging only some of the packets it received. The server will conclude that the unacknowledged packets were lost, and reduce its congestion window. If the apparent packet loss is high enough, the congestion window is reduced to its minimum value of just 2 packets per RTT when using the New Reno or Cubic congestion controller.

The attacker’s ability to manipulate the victim’s send rate doesn’t stop here. To reduce the send rate even further, the attacker can make the victim believe that the path has a much longer RTT by only acknowledging packets after a certain delay. For example, the attacker can decide to only acknowledge packets that it received 1s ago. Assuming an actual RTT of 20ms, the victim will now conclude that the RTT is 1020ms, leading to a further reduction of the send rate by a factor of more than 50.

At the same time, the attacker keeps sending a flood of PATH_CHALLENGE frames. The victim now has no choice but to queue an ever-growing number of PATH_RESPONSE frames.
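To get a feeling for how quickly such a queue grows, here is a toy simulation. The rates are invented for illustration (they are not measurements), and the model ignores everything except the difference between the incoming challenge rate and the outgoing response rate.

```go
package main

import "fmt"

// queueLengths returns the number of queued PATH_RESPONSE frames after each
// second, given how many challenges arrive and how many responses the
// congestion controller allows the victim to send per second.
func queueLengths(challengesPerSec, responsesPerSec, seconds int) []int {
	var lengths []int
	queued := 0
	for s := 0; s < seconds; s++ {
		queued += challengesPerSec
		sent := responsesPerSec
		if sent > queued {
			sent = queued
		}
		queued -= sent
		lengths = append(lengths, queued)
	}
	return lengths
}

func main() {
	// Hypothetical rates: 100,000 challenges/s arriving, 2 responses/s leaving.
	for i, n := range queueLengths(100000, 2, 5) {
		fmt.Printf("after %ds: %d queued PATH_RESPONSE frames\n", i+1, n)
	}
}
```

As long as challenges arrive faster than responses can leave, the queue grows linearly with time and never drains.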

Defending against the Vulnerability

As described above, the RFC requires that each and every PATH_CHALLENGE frame is responded to with a PATH_RESPONSE frame. While it’s useful to send a few PATH_CHALLENGE frames on a new path to obtain an RTT measurement and to guard against packet loss, it’s hard to conceive of a scenario where a huge number of PATH_CHALLENGEs would be beneficial. Under all realistic circumstances, a low single-digit number of PATH_CHALLENGE frames should be sufficient to derive a reasonable RTT estimate and to guard against packet loss on a new path. Furthermore, it’s not expected that a large number of paths would be probed at the same time, not even when using QUIC for NAT Traversal.

If the RFC allowed ignoring an excessive number of PATH_CHALLENGE frames, implementations could stop responding to a flood of PATH_CHALLENGE frames. This is exactly what we do in our fix for this vulnerability in quic-go: we limit the number of queued PATH_RESPONSE frames to 256. This is an absolutely enormous number of PATH_RESPONSE frames, and it’s unlikely that this limit will ever be reached (except under attack). Since a PATH_RESPONSE frame only consumes 16 bytes of memory, this limits the memory consumption to just 4 kB per QUIC connection, making this attack completely uninteresting for any attacker.
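The idea behind the fix can be sketched as a bounded queue. This is not the actual quic-go code: the type and method names are invented for this example, and the choice to drop the newest challenge once the limit is reached is an assumption made here for simplicity.

```go
package main

import "fmt"

// maxQueuedPathResponses is the limit mentioned in the text.
const maxQueuedPathResponses = 256

// pathResponseQueue is a hypothetical bounded queue of PATH_RESPONSE payloads.
type pathResponseQueue struct {
	frames [][8]byte
}

// enqueue queues a response for the given challenge data. It reports false
// if the queue is full, in which case the challenge is ignored.
func (q *pathResponseQueue) enqueue(data [8]byte) bool {
	if len(q.frames) >= maxQueuedPathResponses {
		return false
	}
	q.frames = append(q.frames, data)
	return true
}

// dequeue removes the oldest queued response, once the congestion
// controller allows another packet to be sent.
func (q *pathResponseQueue) dequeue() ([8]byte, bool) {
	if len(q.frames) == 0 {
		return [8]byte{}, false
	}
	data := q.frames[0]
	q.frames = q.frames[1:]
	return data, true
}

func main() {
	var q pathResponseQueue
	dropped := 0
	// Simulate a flood of challenges while nothing can be sent.
	for i := 0; i < 100000; i++ {
		if !q.enqueue([8]byte{byte(i)}) {
			dropped++
		}
	}
	fmt.Printf("queued=%d dropped=%d\n", len(q.frames), dropped)
}
```

No matter how many challenges the attacker sends, the queue never holds more than 256 entries, so the per-connection memory cost stays constant.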

Note that this fix technically makes the implementation non-compliant with RFC 9000, albeit under circumstances that will never occur in the real world. Hopefully, this is just a temporary state. Arguably, the wording of the specification should be changed to allow implementations to defend themselves against this DoS attack.

The quic-go project and the QUIC Interop Runner are community-funded projects.

If you find my work useful, please consider sponsoring: